Ever since I did my master’s in marketing analytics ten years ago, revenue per employee has been one of the primary metrics I use to evaluate businesses.

Both in my research and throughout my career, I’ve found it a useful gauge of potential clients, employers, investments and high-performing businesses.

As a metric, this ratio reflects both the density of talent in a company and the strength of its business model, i.e. the profitability of the market it operates in.

I don’t know why it’s not more of a fixture in everyday business conversations.

There is still an outsized focus on headcount as a measure of success.

Growth is too often equated with hiring—with increasing the roster.

I think this is an outdated, 20th-century way of thinking about business, one that doesn’t account for the AI economics in play today.

So when Amos Bar Joseph of Swan AI wrote a guest post in the latest edition of the New Economies Substack about using ARR/FTE as his company’s North Star metric, I was evidently triggered:

In 2020, reaching $30M ARR meant building a 250-person company. In 2025, AI-native businesses are hitting the same milestone with just 3 operators. This isn't just about doing more with less—it's about a fundamental shift in how companies scale.

—Amos Bar Joseph
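To make the ratio concrete, here is a minimal Python sketch that computes ARR per FTE for the two scenarios in the quote. The helper name `arr_per_fte` is hypothetical, and the only inputs are the quoted figures: $30M ARR reached with 250 people in 2020 versus 3 operators in 2025.

```python
def arr_per_fte(arr_usd: float, fte: float) -> float:
    """Annual recurring revenue divided by full-time-equivalent headcount."""
    if fte <= 0:
        raise ValueError("fte must be positive")
    return arr_usd / fte


# Figures from the quote: the same $30M ARR milestone, five years apart.
arr = 30_000_000
ratio_2020 = arr_per_fte(arr, 250)  # 250-person company in 2020
ratio_2025 = arr_per_fte(arr, 3)    # 3 operators in 2025

print(f"2020: ${ratio_2020:,.0f} ARR per FTE")
print(f"2025: ${ratio_2025:,.0f} ARR per FTE")
print(f"Improvement: {ratio_2025 / ratio_2020:.0f}x")
```

At the same revenue milestone, going from 250 people to 3 moves ARR per FTE from $120k to $10M, roughly an 83x difference.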

I really love Amos’ concept of Autonomous Businesses (go check out his newsletter if you can!), but I also think he’s making it sound more complicated than it actually is.

In fact, you don’t need to be an AI startup to leverage AI effectively.

All it takes is the will to get started, to make mistakes, and learn from them.

As long as you treat AI as a core business strategy, you are directionally correct.

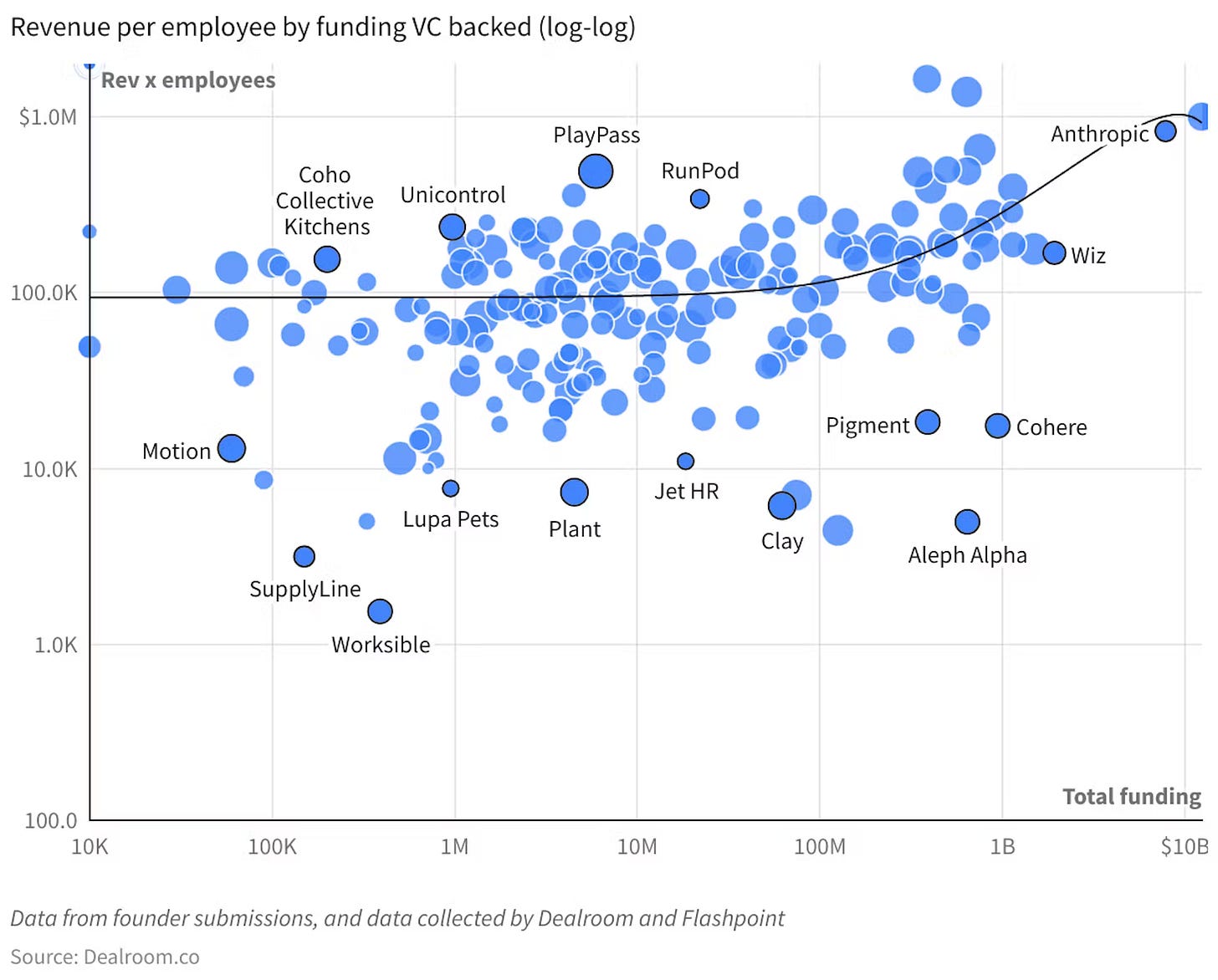

The gap between AI-native and traditional SaaS is growing every day

The difference in performance is most evident among AI startups, which are hitting ARR/FTE ratios 15-25x better than those of their peers!

Over the past two decades, startup efficiency has increased dramatically. In the early 2000s, companies like LinkedIn needed nearly 900 employees to hit $100M ARR. By the 2010s, product-led growth reduced that number to around 250 employees for companies like Slack. Today, AI-native startups have slashed that ratio to just 0.2 employees per million dollars in ARR, a 15-25x improvement in efficiency.

—Marjolaine Catil, Newfund Management
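The quote expresses efficiency as employees per million dollars of ARR, while this post uses the inverse, ARR per employee. A minimal Python sketch converting between the two, using only the quoted figures (the function name `arr_per_employee` is hypothetical):

```python
def arr_per_employee(employees_per_million_arr: float) -> float:
    """Invert 'employees per $1M ARR' into 'USD of ARR per employee'."""
    if employees_per_million_arr <= 0:
        raise ValueError("ratio must be positive")
    return 1_000_000 / employees_per_million_arr


# Figures as quoted: ~900 people at $100M ARR, ~250 people at $100M ARR,
# and 0.2 employees per $1M ARR for today's AI-native startups.
benchmarks = {
    "LinkedIn (early 2000s)": 900 / 100,  # 9.0 employees per $1M ARR
    "Slack (2010s)": 250 / 100,           # 2.5 employees per $1M ARR
    "AI-native (today)": 0.2,
}

for name, ratio in benchmarks.items():
    print(f"{name}: {ratio} employees per $1M ARR "
          f"-> ${arr_per_employee(ratio):,.0f} ARR per employee")
```

Read this way, 0.2 employees per $1M ARR means each employee carries roughly $5M of ARR.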

One of the key drivers of this efficiency gap is that AI startups spend the funds they raise on improving the product they sell, by hiring key people and building AI infrastructure that drives further efficiency gains.

That is, if they even need to raise.

A lot of them are massively successful and could bootstrap if needed.

Contrast this with previous iterations of VC-led SaaS growth, where most of the money raised from investors was burnt on marketing and sales.

And while some traditional SaaS companies like Salesforce and Microsoft* are waking up to AI economics, the question is whether they will be able to adapt fast enough to catch up with companies that have been built from the ground up as automation-first, AI-native businesses.

*) Microsoft’s May 2025 round of layoffs seems to target middle management in an effort to improve the ratio of engineers to managers.

Blueprint for an automation-first business

The great thing is that AI economics apply to most business models.

You don’t need to be in a high-tech industry to reap the benefits.

In fact, traditional services industries with outdated SaaS products can be much more interesting from a financial perspective!

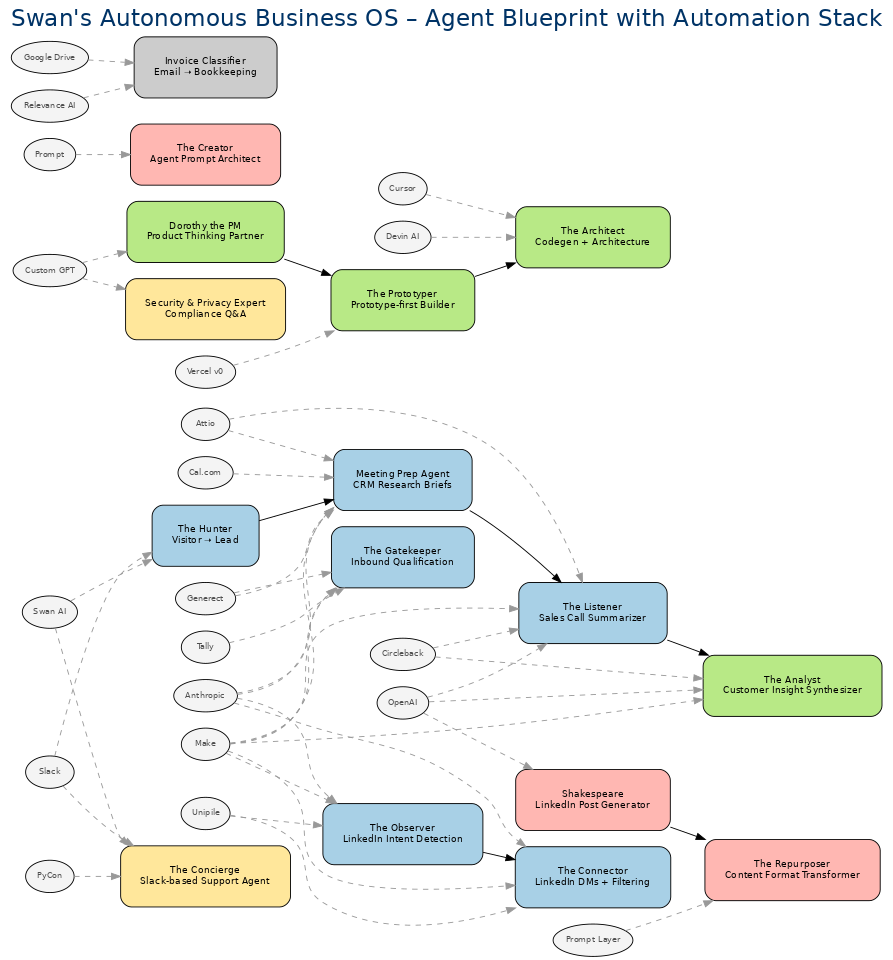

Let’s see what this looks like for Swan AI, since they have been gracious enough to publish their automation blueprints online through their Autonomous Business OS GPT:

These ~10 AI agents are what they are counting on to scale to USD 30M ARR with 3 operators.

And most of their stack is no-code—no software engineering team involved:

They use Make.com (affiliate link), a no-code automation platform that integrates with most of the SaaS tools they use, to orchestrate most of their automations. An automation platform like Make.com or n8n lets you leverage existing tooling without having to build your own integrations.

They leverage LLMs from OpenAI and Anthropic to do most of the heavy lifting in customer support, content creation, sales and product management, crafting them into AI agents that are tailored to their specific business processes.

Their AI automation systems are set up in such a way that the humans in the loop can focus their time on the things humans do best—talk to other humans, set strategic direction, and iteratively improve system performance.

The only place they do use code is in their own SaaS product—an omni-channel CRM system. But even here, most of the code is generated by AI agents in Cursor and Devin.

Applying AI economics to their operations has allowed them to scale to several million in ARR with a team of 3 FTE (>USD 800k / FTE), and they are on track to hit their North Star target of USD 10M per FTE in the next couple of years!
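Working the North Star backwards shows what it implies for a three-person team. A small Python sketch using only the figures stated above (3 FTE, more than USD 800k per FTE today, a USD 10M per FTE target); the helper `arr_at_target` is hypothetical.

```python
def arr_at_target(fte: int, target_arr_per_fte: float) -> float:
    """ARR a team of `fte` people needs to reach a given ARR/FTE level."""
    return fte * target_arr_per_fte


team = 3
current_floor = 800_000   # ">USD 800k / FTE" as stated, so a lower bound
north_star = 10_000_000   # USD 10M per FTE North Star target

print(f"ARR implied today (lower bound): ${arr_at_target(team, current_floor):,.0f}")
print(f"ARR needed at the North Star:    ${arr_at_target(team, north_star):,.0f}")
# Because today's figure is a floor, this multiple is an upper bound.
print(f"Growth multiple still to go (at most): {north_star / current_floor:.1f}x")
```

Holding headcount at 3, the USD 10M/FTE target corresponds to USD 30M ARR. Because USD 800k is a floor, the remaining growth multiple is at most 12.5x.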

If that isn’t grounds to rethink your business operations, I don’t know what is.

Want to become an AI operator yourself?

Start today by joining my free 5-day email course to learn the basics of AI automation:

Last week in AI

AI coding updates: Cursor released a new Tab model built in-house that is faster, cheaper, and better than most commercial LLMs. Windsurf unveiled its SWE-1 AI engineer, and OpenAI rolled out its own AI engineer, Codex.

Google DeepMind introduced AlphaEvolve, a new coding agent designed to discover and create more efficient algorithms. This AI agent has already demonstrated its capabilities by improving the efficiency of Google's data centers, contributing to chip design, and speeding up AI training processes.

Tokyo-based Sakana AI introduced the Continuous Thought Machine (CTM), a novel AI model architecture inspired by biological neural networks and the way the brain processes time. Unlike traditional models, CTM uses the synchronization of neuron activity for reasoning, allowing it to "think" through problems step-by-step, which enhances interpretability.

ByteDance researchers open-sourced Deer Flow, a deep research project on GitHub that lets you customise your own deep research agents with MCP servers and your own choice of AI models.

OpenAI introduced HealthBench, a specialized benchmark to assess the performance and safety of large language models (LLMs) in various healthcare applications, such as medical question answering and data summarization. This tool provides a standardized evaluation framework, helping developers and healthcare professionals gauge the reliability of LLMs for medical use cases.

A new paper from Microsoft Research and Salesforce Research reveals that even top LLMs perform significantly worse in multi-turn conversations than in single-turn, fully specified instruction settings, with an average performance drop of 39% across various tasks. This "lost in conversation" phenomenon occurs because LLMs tend to make early assumptions, prematurely attempt final solutions, and then rely too heavily on these initial, often incorrect, attempts without recovering from their mistakes.

Another way to look at this trend is to examine how funding models are evolving and what type of capital is deployed to create growth, as this excellent analysis does: https://open.substack.com/pub/matttbrown/p/vc-pe-envy?utm_source=share&utm_medium=android&r=3tyiu7