Coding Is Solved. What's Next?

What a million AI conversations reveal about the future of work

As of early 2026, AI coding is a solved problem.

Claude Code went from research preview to a billion-dollar product in just six months.

GitHub Copilot has 1.8 million paying subscribers.

The latest AI models can autonomously fix 80% of real software bugs.

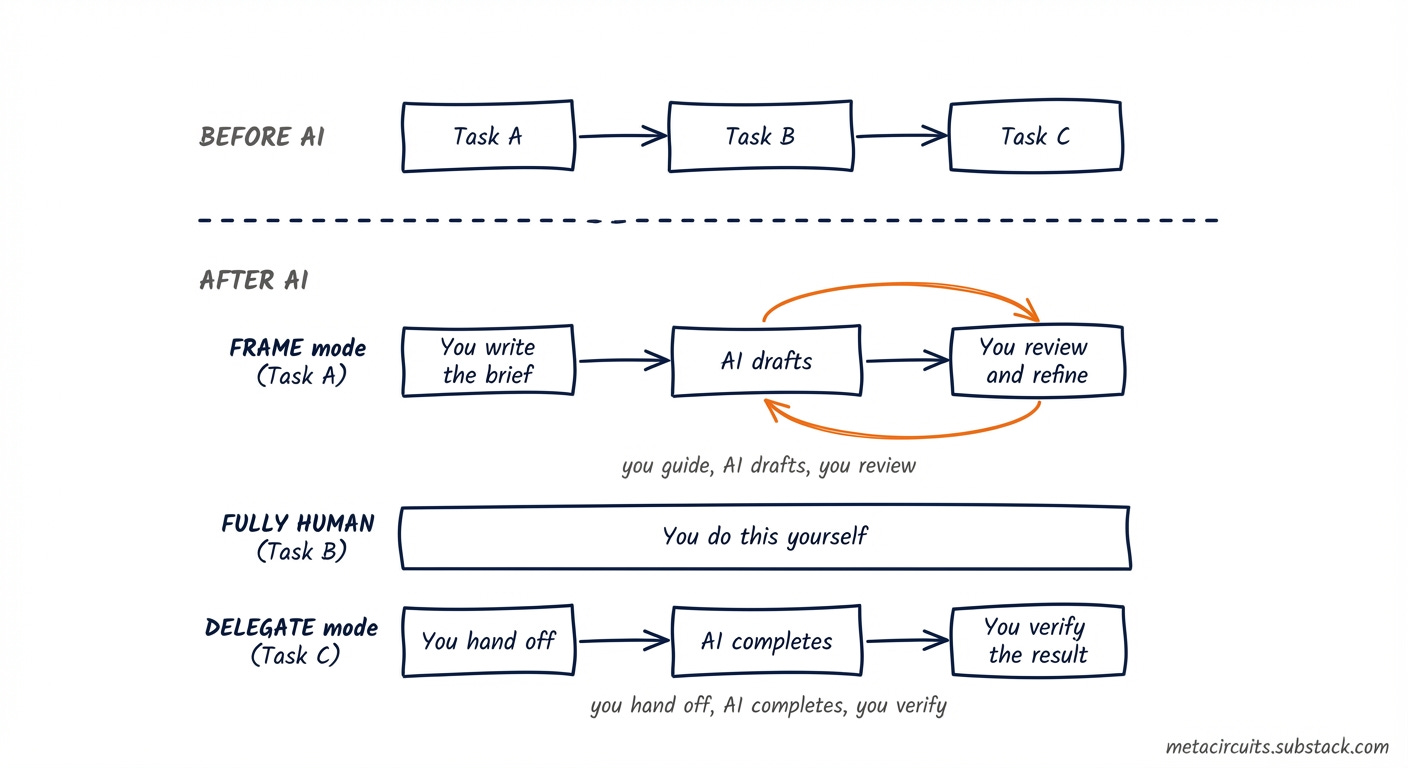

Three weeks ago I argued that delegating tasks to AI is the new default way of work.

Coding proved it first.

This doesn’t mean that all software engineers will soon be out of a job.

It does mean they need to keep up and start adopting AI into their day-to-day.

Anyone still typing out every line of code by hand will be at a massive disadvantage—engineers using AI coding tools complete coding tasks roughly 30-60% faster.

It made me wonder, what is the next sector to experience this kind of transformation?

I looked for answers in a dataset most people aren’t looking at: the Anthropic Economic Index, which maps a million real AI conversations to 3,500 individual tasks.

Forget jobs—think tasks

Because in the AI-and-jobs debate, tasks are the correct unit of analysis.

AI doesn’t replace “software developers.” It handles specific tasks—like “fix bugs in existing software” (4.8% of all conversations)—much better and faster than humans can.

Likewise, AI doesn’t replace “administrative assistants.” It just does “draft and refine business emails” much more efficiently than a human typing on a keyboard.

And AI doesn’t replace “consultants.” But it does “structured analysis” more systematically and thoroughly than can be realistically expected from any human.

When you look at AI usage mapped to 3,500 individual work tasks, the picture is clear: AI is extraordinarily capable at specific tasks, but unable to do complete jobs.

What is even more interesting is how extreme the differences between tasks are, in both performance and adoption.

The top 100 tasks in the Anthropic Economic Index data in total account for half of all AI usage categorized by their researchers. The other 3,400 tasks barely register.

This matters for your business, because instead of worrying about whether or not AI will replace you or your team, you should start to think about which parts of your work you should do yourself—and which parts should be delegated to AI assistants.

The number that cuts through the noise

There’s one data point, highlighted by Epoch AI, that explains the gap between AI hype and AI-at-work reality better than anything else.

It’s a tale of three AI benchmarks, with tasks at three levels of realism.

On clean, isolated expert tasks—the kind you see in demos—AI scores 74%.

But on multi-step professional tasks performance drops to 30%.

And on actual freelance projects pulled from Upwork it scores just 3.75%.

74% on specific tasks—that take humans an average of 7 hours to complete.

30% as an all-round assistant—on tasks that take humans 2 hours on average.

3.75% on-the-job performance—on tasks that take humans roughly 29 hours.

What also stands out is the amount of time saved:

The Anthropic data shows that the average AI-assisted task takes 15 minutes instead of 3 hours, with an average 67% success rate—that’s a 12x speedup!

Which means that AI is compressing routine parts of a lot of jobs fast enough that even with a one-in-three correction rate, the math still works.
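As a rough check on that claim, here is a minimal Python sketch using the figures quoted above. The cost model is an assumption for illustration, not something taken from the Anthropic data: it treats every failed AI attempt as costing extra human time, and the `expected_minutes` function and its `fix_fraction` parameter are hypothetical names. With `fix_fraction=1.0` it models the worst case, where a failed attempt is thrown away and the task is redone by hand.

```python
# Back-of-the-envelope check: does delegation still pay off at a 67% success rate?
# The figures are the ones quoted above; the cost model itself is an assumption.

AI_MINUTES = 15        # average AI-assisted task time
HUMAN_MINUTES = 180    # same task done by hand (3 hours)
SUCCESS_RATE = 0.67    # share of AI attempts that need no correction


def expected_minutes(ai_minutes, human_minutes, success_rate, fix_fraction=1.0):
    """Expected time per task when failed AI attempts are repaired by a human.

    fix_fraction (hypothetical): repair time as a share of the full manual time.
    1.0 means a failed attempt is discarded and the task is redone by hand.
    """
    failure_rate = 1.0 - success_rate
    return ai_minutes + failure_rate * fix_fraction * human_minutes


worst_case = expected_minutes(AI_MINUTES, HUMAN_MINUTES, SUCCESS_RATE)
speedup = HUMAN_MINUTES / worst_case

print(f"Expected time per task: {worst_case:.1f} min")  # 74.4 min
print(f"Effective speedup: {speedup:.1f}x")             # 2.4x
```

Under this pessimistic assumption the 12x headline shrinks to roughly 2.4x, yet the task still takes less than half the manual time. Real corrections are usually cheaper than a full redo, so the actual figure likely sits somewhere between the two.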

What changes next

Which brings me back to the question—if coding fell first, which sectors will follow?

Data and analyses from Anthropic, NBER, Google, OpenAI, Microsoft, WEF, Wharton, Goldman Sachs, Gartner and Eurostat point to three sectors.*

Office and admin work is the most exposed.

Google Gemini alone edited 1.4 billion documents in the first half of 2025 and now auto-summarizes 78% of Google Meet sessions. Microsoft Copilot handles email drafts, meeting recaps, and spreadsheet work across 15 million enterprise seats.

Data entry, transcription, and routine correspondence are being automated across all major platforms simultaneously. This also includes a lot of customer service work—where autonomous voice agents are improving fast.

So if you or your team spend hours on meeting notes, email drafts, or organizing and summarizing information—it’s probably time to start delegating today.

Professional services—consulting, legal, accounting—are next in line.

AI drafts legal briefs, generates financial models, and produces research summaries at 5-10x the speed of a junior professional. But here’s the catch: on multi-step consulting and legal tasks, AI right now only succeeds about 30% of the time. Good enough to accelerate the work, nowhere near good enough to full-on replace professionals.

Financial services are another big candidate, although the full impact probably won’t be seen until late 2027 due to the complexities and regulations involved.

AI screens for fraud, monitors compliance, and drafts financial analysis. Healthcare and finance showed 3.4x growth in Google Gemini adoption in 2025, driven by documentation, compliance monitoring, and data synthesis.

Notice the pattern.

In every sector, AI takes over more and more of the execution layer—the drafting, the summarizing, the analyzing, the researching and so on.

The human stays in the loop for judgment, client relationships, and final decisions.

Where “good enough” is good enough

Which should honestly come as a relief to most of us.

Because for many of the tasks we are already handing over to AI agents, the 10% improvement from getting a human involved adds almost nothing.

An AI-drafted internal email that’s 90% as good as what you’d write yourself? Economically identical—nobody reads operational emails for their literary appeal.

An AI meeting summary that captures only 90% of the nuances? Junior team members will be relieved they no longer need to do that chore.

An AI translation of an internal document? Now it takes a couple of seconds instead of two weeks of back and forth with a translation bureau.

So even when AI gets it wrong a third of the time (66.9% success rate), for a lot of tasks the 12x speed advantage dwarfs the cost of occasional human corrections.

Where being human is the product

Then there’s a category of work where humans don’t just add value but are the product.

If you’re a consultant, a coach, an advisor, or a founder—this is your territory.

Call it human alpha:

Your judgment under ambiguity. When a client comes to you with a messy situation, they’re paying for your read on the situation. AI can summarize the data, but it can’t tell your client which strategic bet to make. The 74% vs 3.75% gap exists precisely because real work is ambiguous—and handling ambiguity well is what professionals get paid for.

Your accountability. AI can draft the proposal, the analysis, the plan. But someone has to stake their reputation on it. In any domain where someone needs to be held responsible for the outcome, the human isn’t optional.

Your relationships. Client management, negotiation, trust-building—the data shows these are the tasks with the lowest AI automation potential of any category (4.4%). Your clients work with you because they trust you, not because of your email writing skills.

Your ability to frame the right problem. AI can solve problems. But you decide which ones matter. This is the most underrated skill in an AI-augmented world: looking at a messy situation and knowing what to focus on.

Here’s a finding from the AEI data that validates this: there’s a near-perfect statistical correlation (r > 0.92) between the expertise needed to write the prompt and the quality of AI’s response.

You need to understand the work to be able to delegate it effectively.

AI doesn’t reduce the need for expertise. It shifts where and how that expertise gets applied: from execution to direction and evaluation.

This is exactly what my AI Operators participants discover in our sessions: AI is powerful at execution, but you still need to be the architect of your workflows.

Your value shifts from doing to directing.

The supervision tax

One more number.

Anthropic researchers found that AI errors and the resulting human corrections cut expected economic productivity gains nearly in half.

An estimated AI productivity boost of 1.8 percentage points per year falls to about 1.0 when the time we spend reviewing, correcting, and refining AI output is factored in.
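To make "nearly in half" concrete, the arithmetic can be sketched in a few lines of Python. The two inputs are the figures quoted above; the variable names are illustrative only.

```python
# How much of the estimated productivity gain does review and correction absorb?
GROSS_GAIN_PP = 1.8  # estimated yearly productivity boost, in percentage points
NET_GAIN_PP = 1.0    # what remains after review and correction time

supervision_tax_pp = GROSS_GAIN_PP - NET_GAIN_PP
share_absorbed = supervision_tax_pp / GROSS_GAIN_PP

print(f"Supervision tax: {supervision_tax_pp:.1f} pp ({share_absorbed:.0%} of the gross gain)")
# Supervision tax: 0.8 pp (44% of the gross gain)
```

So roughly 44% of the estimated gain goes to checking and fixing AI output.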

As mentioned, one in three AI task completions still needs correction.

This is the supervision tax—and it’s one of the reasons the macro data doesn’t show AI’s impact yet after three years of ChatGPT, 900 million weekly users, and $2 trillion in global AI spending.

The task-level data explains why.

AI transforms specific tasks at dramatic speed—12x faster, 100x cheaper, 10x better.

But the supervision tax (plus the time it takes organizations to adopt and adapt to new ways of working) is still absorbing a lot of the theoretical productivity gains.

If you’ve ever spent 20 minutes fixing an AI draft and thought “I could have just written this myself”—you’ve experienced the supervision tax firsthand.

The key is knowing which tasks are worth delegating despite the tax (most operational tasks) and which ones aren’t (anything client-facing that requires your judgment).

The window

All of this points to a specific window in which the economics favor early movers.

That 18-month window still gives you some time to build your systems without panic.

But the task-level transformation is already here.

Coding crossed first.

Admin work and professional services are crossing this year.

The people and organizations that learn to decompose their work into tasks—identify which ones AI handles well, build review processes around the rest—will compound advantages that will get harder and harder to catch up to.

The meta-skill isn’t mastering a specific AI tool. It’s learning to delegate effectively.

As I wrote three weeks ago: the real cost is rapidly shifting from execution—the how—to knowing what to build.

And this shift is happening across every knowledge-intensive profession.

Building your own AI operating system? AI Operators is my 4-week 1-on-1 coaching program where we map your workflows, identify which tasks to delegate, and build the automation together. No technical background needed. Reply to this email to see if it’s a fit.

Last Week in AI

Anthropic closed a $30 billion Series G round led by GIC and Coatue, valuing the company at $380 billion post-money. The company says its run-rate revenue is $14 billion, growing over 10x annually for each of the past three years. The round follows Opus 4.6’s launch and the release of the Cowork plugins that triggered the “SaaSpocalypse” in enterprise software stocks the previous week. Anthropic also quietly announced it will cover electricity price increases for communities near its data centers—a tacit acknowledgement that the infrastructure demands of frontier AI are becoming a political liability, not just an engineering challenge.

Google released a major upgrade to Gemini 3 Deep Think, its specialized reasoning mode built for open-ended scientific and engineering problems where data is messy and solutions are unclear. The results are striking: 48.4% on Humanity’s Last Exam (without tools), 84.6% on ARC-AGI-2, and Legendary Grandmaster status on Codeforces. Researchers have already used Deep Think to identify logical flaws in peer-reviewed papers, optimize crystal growth processes, and convert hand-drawn sketches into 3D-printable models. Deep Think is now available to Google AI Ultra subscribers and, for the first time, via the Gemini API to select researchers and enterprises.

Z.ai (formerly Zhipu AI) launched GLM-5, a 744B parameter foundation model (40B activated) that claims coding performance on par with Claude Opus 4.5 and state-of-the-art agentic capabilities among open-weight models. GLM-5 was built for what Z.ai calls “Agentic Engineering”—long-horizon agent tasks like backend architecture refactoring, complex debugging, and multi-step tool orchestration.

NVIDIA published production data showing inference providers cutting token costs by up to 10x on Blackwell GPUs compared to Hopper, with the savings coming from combining Blackwell hardware, optimized software stacks, and the switch from proprietary to open-source models. The case studies are concrete: Sully.ai cut healthcare inference costs by 90% while improving response times by 65%, DeepInfra reduced gaming costs from 20 cents to 5 cents per million tokens using NVFP4 precision, and Decagon’s customer service voice AI dropped cost per query by 6x. Open-source models running on Blackwell infrastructure are now price-competitive with closed-source alternatives at comparable quality, fundamentally changing the build-vs-buy calculus for production AI.

The UN General Assembly approved a 40-member scientific panel to assess the impacts and risks of artificial intelligence, passing with 117 votes in favor over US objections. The panel, which will serve a three-year term, is the first formal international body tasked with providing scientific assessments of AI.

*) The full 33-page report with all sources and data points is available for download to paid subscribers of The Circuit on the AI OS hub.

The supervision tax, just 1% net productivity boost with AI when factoring correction was an unexpected stat.

Thinking about it, it makes sense even in my use cases, but I guess it cuts down the boring work and I focus more on thinking and evaluating.

I completely agree the important meta skill is now effective delegation, and that itself keeps shifting by the day with new models and tool launches.