It’s funny how I’m using deep research in Perplexity Pro on a daily basis, even though two months ago “deep research” didn’t exist in any consumer product.

After OpenAI released “Deep Research for ChatGPT” on February 2nd of this year, Perplexity and Google scrambled to add their own versions to their AI assistants.

Perplexity got lucky here (and so did I, as a Pro user), because DeepSeek had just released R1, which does most of the heavy lifting in Perplexity’s DR feature.

This means (in case you’re wondering) no daily limits on DR queries in Perplexity Pro.

But that’s not what I wanted to talk about today.

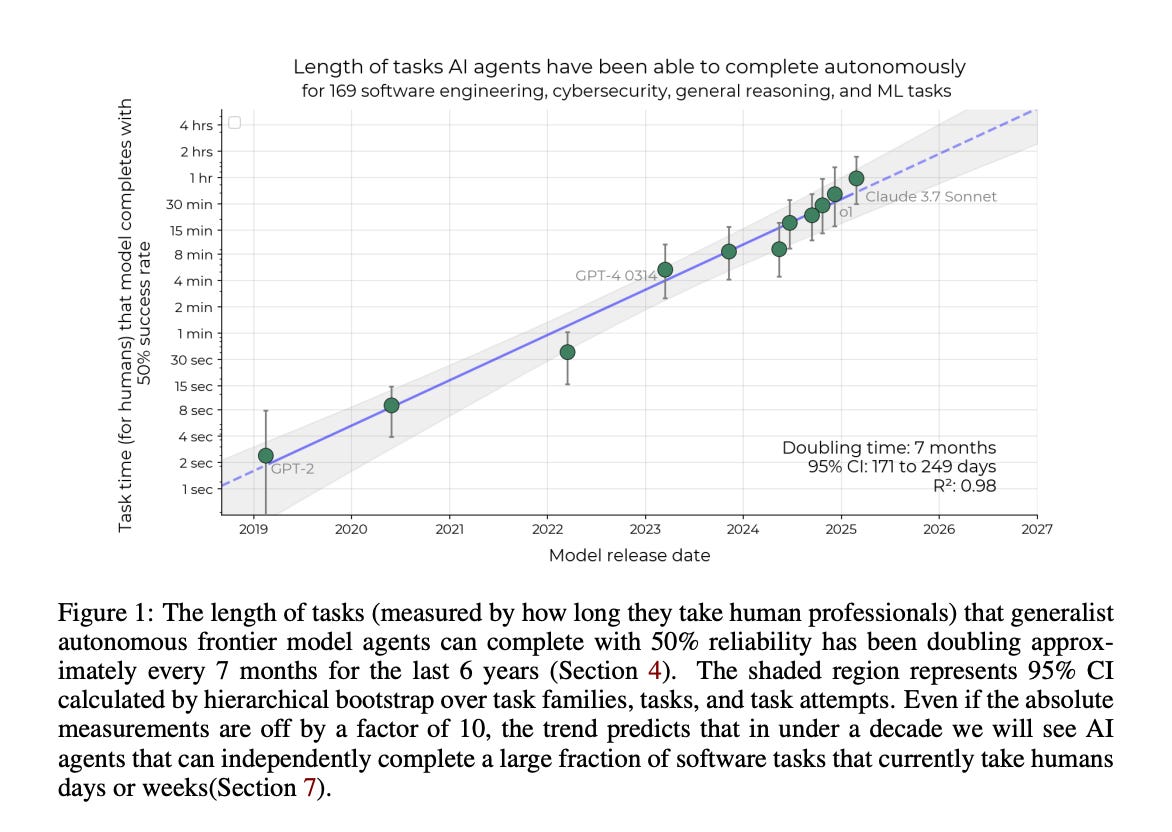

This week the team at METR (Model Evaluation and Threat Research, a non-profit AI research group) released a research paper in which they plot how the length of the tasks an AI system can work on autonomously has grown over time.

Here is one of the key plots in their research paper:

As can be seen, we’re looking at some serious progress from GPT-4, released in early 2023 (~5 minutes of “autonomous” work), to Claude 3.7’s ~1 hour of “autonomous” work.

At the risk of stating the obvious, this is a key data point for anyone tracking the capabilities of AI agents, agentic AI workflows and AI software.

As base AI systems are becoming more powerful, they will be able to facilitate longer and more complex workflows, taking on higher-level cognitive work.

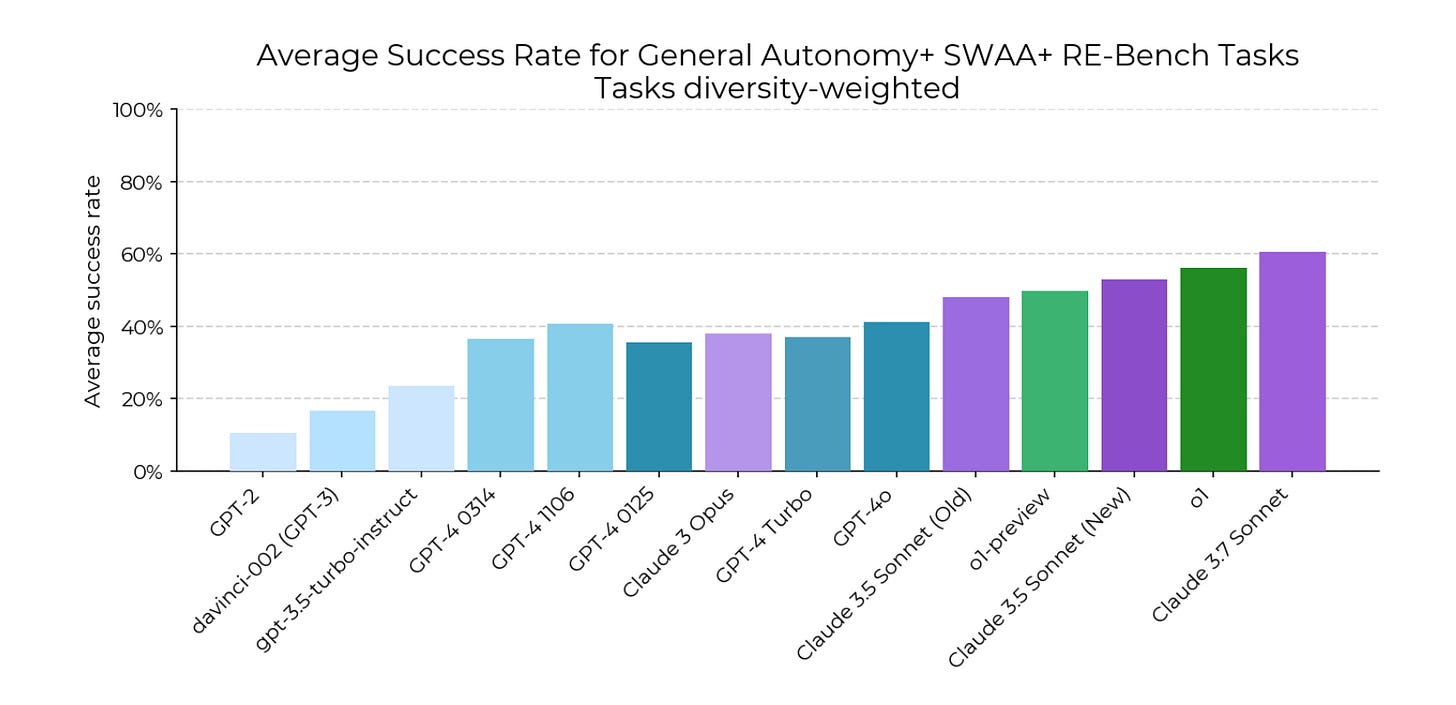

It’s not just attention spans that have increased. The quality of the output of these systems has also improved significantly (~38% success rate for GPT-4 vs ~60% success rate for Claude 3.7 Sonnet on the benchmarks in the METR evaluation set).

The Architecture of Thought

These advances in reasoning and planning come on top of the already superhuman memorisation capabilities these systems have, allowing them to excel on tests like the MMLU (Massive Multitask Language Understanding) benchmark.

This benchmark measures the amount of domain knowledge these systems are able to absorb from their training data and re-apply when prompted.

In short, newer AI systems not only know much more than you do, they are also able to concentrate on tasks for much longer than the average mushy human brain (mine included).

A good example is the progress of recent AI systems on SWE-bench, a test designed to measure an LLM’s ability to understand, modify, and debug complex codebases.

These systems can now autonomously fix bugs in production-level software that would have stumped their predecessors just months ago.

And these improvements aren't happening by accident—or through a single theoretical advancement.

Rather, they come from the realisation by AI labs across the world that reasoning is the capability that will generate the greatest amount of practical value.

Whether this is because these AI systems need to be intelligible for their users (us humans), or whether this is truly the optimal path forward, is beyond the scope of this post.

Suffice it to say, most AI research labs are actively researching new ways to improve the reasoning capabilities of their AI systems:

Anthropic's Claude 3.7 Sonnet pairs the company's "constitutional AI" training approach with an extended thinking mode, in which the model works through a longer chain of thought that breaks complex problems down into manageable steps. Rather than simply pattern-matching, Claude can explore more than one solution path and weigh them for logical coherence and helpfulness before answering.

Google's Gemini 2.0 Flash Thinking is trained to write out its thinking before it answers, which separates fast, intuitive responses from slower, more deliberate reasoning, loosely mirroring human thought processes. This lets the model tackle problems that require both creative leaps and methodical analysis.

OpenAI's o3 is trained with reinforcement learning to reason through a long chain of thought before answering, critiquing and refining its own reasoning along the way, which leads to significantly better outcomes on complex tasks.

DeepSeek's R1 has made waves by achieving reasoning abilities comparable to leading proprietary models while reportedly requiring significantly less computational power. Built on a Mixture-of-Experts (MoE) architecture and released with open weights, it has democratised access to sophisticated AI reasoning tools.

Looking Forward: The Half-Life of Human Intelligence

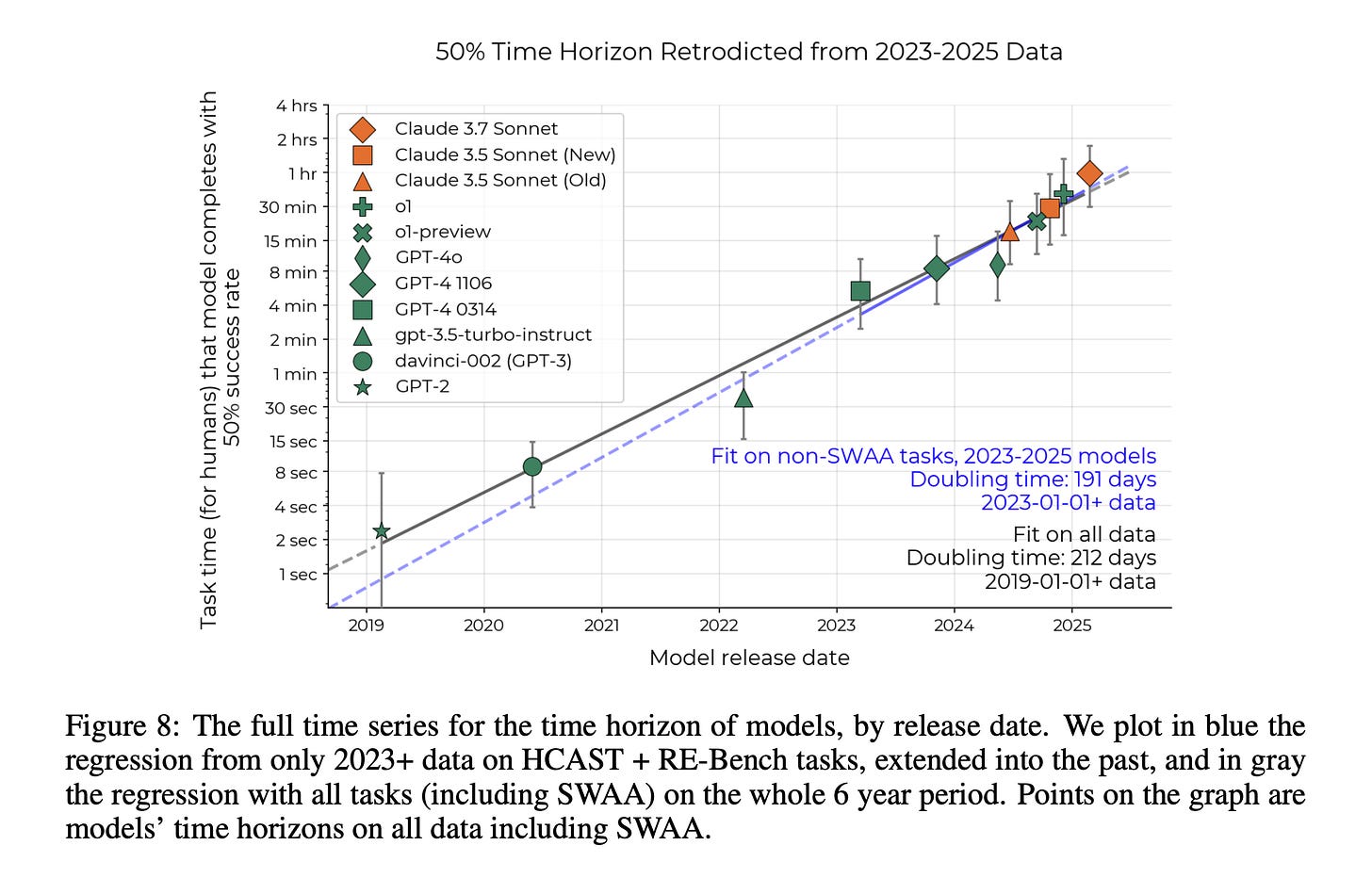

What is evident from all this is that the trend outlined by Kwa et al. in their research paper can probably be used as a Moore’s law for AI agents.

Based on the—admittedly limited—historical data, they predict a doubling of the amount of time an AI agent can operate autonomously every 7 months:
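To make that doubling concrete, here is a rough back-of-the-envelope sketch in Python. It assumes a starting point of roughly one hour (the Claude 3.7 figure mentioned earlier) and a clean 7-month doubling period. The function names and the starting value are my own illustrative choices, not something taken from the METR paper, and real progress will not follow a perfectly smooth curve.

```python
import math

DOUBLING_MONTHS = 7.0  # doubling period quoted in the text
START_MINUTES = 60.0   # assumption: ~1 hour of autonomous work today


def projected_horizon_minutes(months_ahead: float,
                              start_minutes: float = START_MINUTES,
                              doubling_months: float = DOUBLING_MONTHS) -> float:
    """Task length (in minutes) after `months_ahead`, assuming steady doubling."""
    return start_minutes * 2 ** (months_ahead / doubling_months)


def months_until(target_minutes: float,
                 start_minutes: float = START_MINUTES,
                 doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months needed to reach `target_minutes` under the same assumption."""
    return doubling_months * math.log2(target_minutes / start_minutes)


if __name__ == "__main__":
    # Print the projected horizon at each successive doubling.
    for months in (0, 7, 14, 21):
        hours = projected_horizon_minutes(months) / 60
        print(f"+{months:>2} months: ~{hours:.0f} h of autonomous work")
```

Under these assumptions, a one-hour horizon becomes two hours after 7 months and a full eight-hour working day after 21 months.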

Based on this forecast and my experience with AI agents, by fall 2025 I expect we’ll have:

Agentic AI outsourcing platforms that are commercially viable, enabling you to hire agentic AI systems to work directly on complex problems with little or no human intervention. Fiverr for example has already been experimenting with a “Personal AI Creation Model” they released mid-February.

Industry-specific reasoning models that combine general reasoning capabilities with deep vertical knowledge. These models will understand industry jargon, regulations, and best practices without additional training and can take on the role of AI consultants for that industry or domain.

More low-touch human-AI collaboration flows, as software developers learn how to track AI system processes and limitations and build the right UX to request human input only where needed. Current systems too often either fail silently or require constant supervision.

Better AI interpretability as mission-critical uses of AI systems require greater degrees of transparency than the current consumer and research applications. This means better UX, source attribution, grounding, and filters for reasoning, but also better insights into how AI systems come to specific conclusions.

So as you can see, exciting times ahead for those in AI, research, software or any knowledge profession.

The AI Automation Play

If you’re reading all this but are left wondering how to actually get started with AI, agents, and automations, I’ve got you covered: check out The AI Automation Playbook on Gumroad, in which I’ve bundled together 10 years of experience building AI automations in a beginner-friendly format.

PS. A quick way to save a few bucks on your purchase is by becoming a paid subscriber to The Circuit, in which case I’ll mail it to you for free! :)

This week in AI

Claude finally gets internet access: Anthropic has integrated real-time web search functionality into its AI assistant Claude. Initially available to paid US users, this feature enhances Claude's utility for tasks requiring recent data, narrowing the gap with competitors like ChatGPT and Google's Gemini. The upgrade addresses a long-standing limitation and is expected to improve Claude's performance in areas such as market analysis and deep research.

Google Gemini introduces Canvas and Audio Overview: Gemini's new Canvas feature offers real-time collaborative editing for documents and code, enabling users to generate and refine content (like speeches or web apps) with AI assistance and export it to Google Docs. The Audio Overview tool transforms documents into podcast-style discussions, summarising key points for on-the-go learning. Both features aim to streamline creative workflows and enhance productivity for professionals and students.

NVIDIA open sources GR00T N1: NVIDIA launched GR00T N1, an open-source foundation model for humanoid robots, combining a dual-system architecture (planning and execution) trained on synthetic and real-world data. Designed for robots like Fourier GR-1, it enables tasks like object manipulation and multi-step operations, with customisable training via Hugging Face and GitHub. This marks a significant step in democratising advanced robotics development.