Building your AI Operating System

A framework for human-machine coordination

AI can never be human, because a core part of being human is knowing how to spot lies—not factual errors, not interpretation mistakes or simple misrepresentations—no, actual full-on deceit.

The ability to spot deceptions is a defining trait of human intelligence.

And AI systems famously s*ck at this—in a sense, they’re one massive deception, a feint or ruse that keeps us captivated because it looks so much like us.

As a philosophy graduate, I had things click into place this week while reading Simler and Hanson’s 2018 book The Elephant in the Brain.

It resonated with Popper’s work on falsifiability, and completely reframed how I think about truth: not in a positive light (ἀλήθεια / aletheia — the absence of forgetting), but in a negative light, as the absence of lies.

In a sense the Turing test—seeing if a computer can convince us it’s human—says more about the human ability to spot deceptions than it does about anything else.

Turns out that in 2025, we’re easily, even happily, fooled by technological artifacts.

And I hadn’t realized this until reading The Elephant in the Brain, but a key part of what’s been happening in AI is that we were already really good at self-deception to start with, and have been for a very, very long time.

This ability to deceive ourselves has made it easier for us to see these technological artifacts as extensions of ourselves, not least because that’s also how they’re presented to us by savvy AI marketers and product peeps. And because we see these AI systems as part of ourselves, we tend to focus on their affirmations and overlook the inconsistencies and logic gaps that inevitably arise as we use them.

Self-deception—as Simler and Hanson write—is a key survival strategy for us humans.

They argue that human intelligence evolved in large part through an ongoing arms race against other humans. We got smarter to gain evolutionary advantages within the small bands of hunter-gatherers that were the center of our existence for hundreds of thousands of years.

Think about it.

What is the defining characteristic of most successful people—of our species’ apex?

Whether it’s a famous politician, a successful business person, an esteemed scientist or an admired actress, they all share one trait—unwavering confidence in themselves and their idea(l)s.

In other words, they are much better at deceiving themselves than the rest of us.

As Simler and Hanson argue, this is not a fluke—it’s a core function of consciousness:

… [through] self-deception … it’s possible for our brains to maintain a relatively accurate set of beliefs in systems tasked with evaluating potential actions, while keeping those accurate beliefs hidden from the systems (like consciousness) involved in managing social impressions.

—Simler & Hanson (2018), The Elephant in the Brain (p. 87)

Throw AI in the mix and things get pretty weird.

The brain-AI integration is already well underway

Neuralink and other brain-machine interfaces aren’t going to be patient zero when it comes to enmeshing AI into our reality—this dubious honor has been taken by the Claude boys and whoever uses ChatGPT as their therapist.

We humans are very adept at integrating different neural systems into our conscious being, so much so that it doesn’t really matter when one of those subsystems is in fact artificial—that it runs on silicon.

Right now it’s still possible to spot people whose “press secretary” (the part of the brain responsible for explaining our actions) has been hijacked by an AI system, because our societal norms don’t generally overlap with those of AI systems.

But as more people start using these tools, reality will be warped around them.

To return to the passage above: traditionally, AI has mostly been used to evaluate potential actions using insights derived from statistical modeling.

Think of quantitative trading algorithms calculating countless possible steps ahead to find the best (that is, the most profitable) path forward. Or of your navigation system ruling out routing options until it finds the shortest path to your destination.

In contrast, these new AI systems—generative AI, ChatGPT & co—allow humans to do something completely different. They allow us to manage social impressions using an artifact. In a sense, it’s similar to the invention of clothing, or to Venetian masks.

These new “generative” AI capabilities have so far mostly been applied to manage the social impressions of non-human entities like organizations and events (marketing being one of the traditional early adopters of AI), but we’re probably on the cusp of a revolution in which these AI systems will start representing you personally—for better or for worse.

So is there nothing you can do?

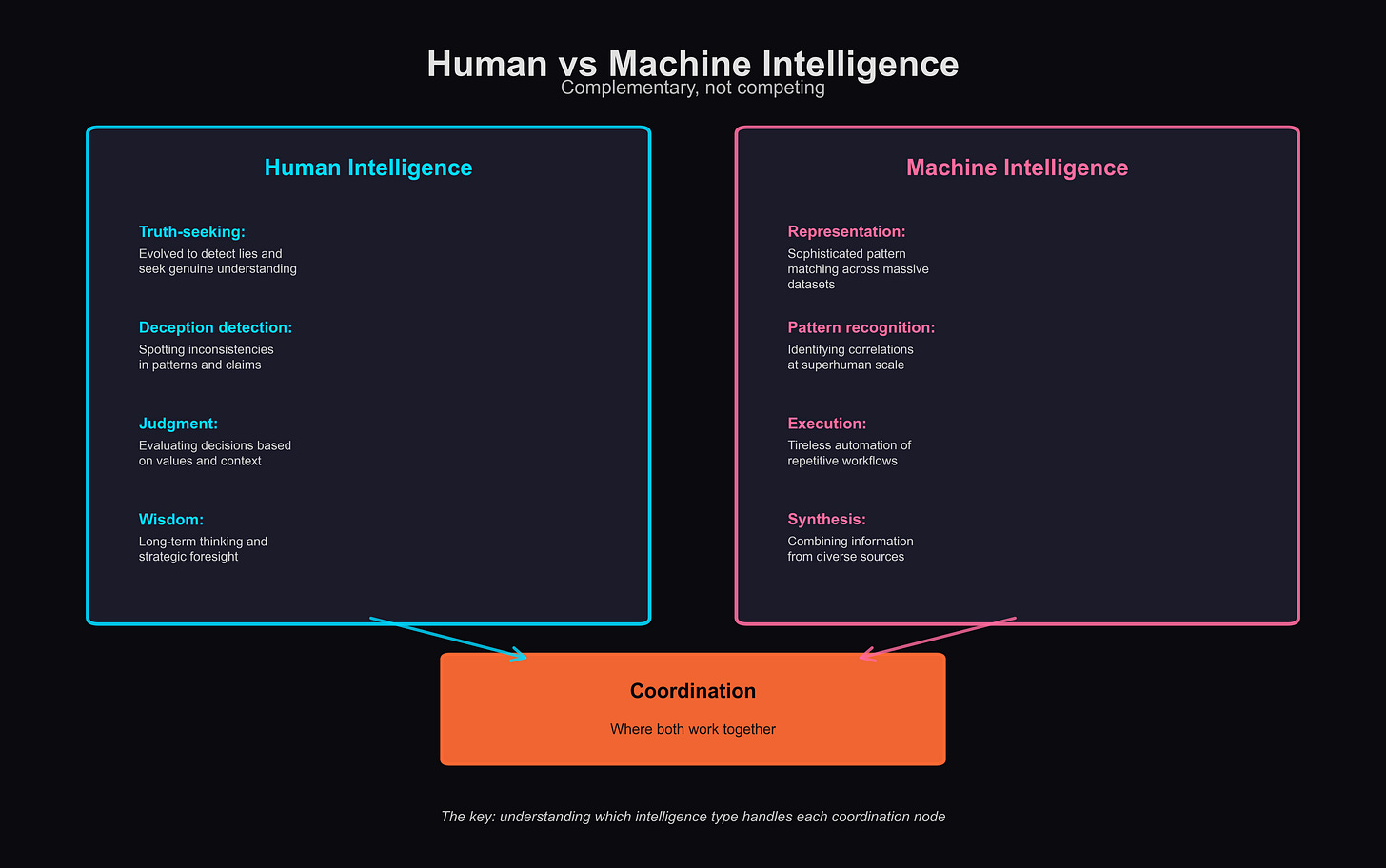

I’d like to think there is: be smart about what you outsource to AI.

Building your own AI Operating System

This means going through your AI usage rigorously and creating systems that work for you—rather than the other way around.

As the role of these AI systems grows in importance in your day-to-day (think AI agents planning your holidays, managing your bank accounts, running IT operations for your business and so on), it is crucial that you are able to ensure they share your values, achieve the objectives you care about, and don’t do anything you wouldn’t do.

To help you orchestrate your agents, I’ve put together a small curriculum that I’ll be sharing through one-on-one coaching for maximum impact.

I’ll also post a video with a complete overview of the program to my YouTube channel later this week, so be sure to subscribe to get notified when it’s released!

My goal with the program is to make sure you stay in control of these new subsystems.

The final pieces of the program have fallen into place over the last few weeks as I read Sangeet Choudary’s Reshuffle (2025). For a long time I’ve felt that the whole automation vs. augmentation debate was too narrow to capture what’s really going on in the world today, and Sangeet rightly points out that automation (doing the same tasks faster or more efficiently) is not the right frame for understanding the changes AI is bringing to our economic systems:

AI’s value lies less in automating tasks and more in enabling new architectures of coordination and decision-making.

—Sangeet Choudary (2025), Reshuffle (p. 96)

What Sangeet writes is spot-on—the way to extract maximum value from these advances in AI is by putting in place the right architectures of coordination and decision-making for your systems of work.

I can help you with that, since I’ve been building AI systems for more than ten years.

If you’re interested, reply to this email so we can schedule a follow-up.

To get a sneak preview, sign up for my free 5-day AI operator email course.

Last week in AI

OpenAI DevDay 2025 brought a wave of infrastructure for AI operations. AgentKit and Agent Builder launched with a visual canvas for multi-agent workflows—Sam Altman called it “Canva for agents.” More significantly, Apps in ChatGPT creates a new distribution channel reaching 800 million users, with an app marketplace and monetization coming later this year. And ChatGPT agent mode is now available for Pro, Plus, and Team users through the tools dropdown.

The AI workflow automation space exploded as n8n announced a $180M Series C at a $2.5B valuation, led by Accel with backing from NVIDIA’s venture arm. The company went from a $350M valuation to $2.5B in just four months. This comes on the heels of its €55M Series B in March, and the speed of growth here shows just how fast businesses are adopting AI automation.

Nathan Benaich released the State of AI 2025 report, revealing a watershed moment in enterprise adoption: 44% of U.S. businesses now pay for AI (up from 5% in 2023), with average contracts hitting $530K and 95% of practitioners using AI tools daily. The report highlights how reasoning became AI’s defining capability in 2025—systems can now plan, self-correct, and work over extended time horizons—while compute infrastructure emerged as the new battleground.

Week 41 AI-on-AI roundup

The AI industry is shifting from “AI as tool” to “AI as infrastructure layer.” Businesses are redesigning operations with AI as their core substrate. The conversation has moved from “which model is best?” to “how do we architect human-AI coordination?” This shift showed up in new approaches to context engineering: Stanford’s ACE framework achieves 10.6% higher accuracy than traditional compression methods, enabling smaller models like DeepSeek-V3.1 to match GPT-4 performance.

Meanwhile, practitioners are developing workflows to preserve strategic thinking across AI conversations and to combat “AI slop” at scale. The infrastructure race intensified, with Google proving that established platforms can move at startup speed when AI becomes existential. With 60% of VC money now flowing to AI and startup AI spend replacing traffic metrics as the measure of value, we’re witnessing the moment AI stops being optional infrastructure and becomes the foundation on which modern businesses operate.


The hardest systems to build are the ones that stay honest when no one’s watching.