Same person, different context, different needs

Why AI coworkers still have a lot to learn

The chat era is ending.

The AI tools gaining traction today work differently.

You don’t chat with them—you delegate work to them.

Define the goal, assign the task, come back when it’s done.

Tools like Anthropic’s Cowork (released January 12), Manus (acquired by Meta on December 29, 2025), and Devin are great examples of this new paradigm.

These tools are often marketed as “AI coworkers”, which is a helpful framing because it gives us a way to understand both their shortcomings and strengths.

Because like any new hire, the better your onboarding, the faster they can deliver value.

And like any new hire, they will only be able to deliver outstanding work if they can complement the existing team.

This is where a lot of organizations go wrong.

They look at AI agents as smarter spreadsheets and fail to take into account the people and process side of the equation.

This is a mistake because, unlike all previous generations of IT systems, agentic AI systems aren’t systems of record; they’re systems of action.

Which means these AI coworkers will inevitably run into the same issues everyone else on your team runs into: information gaps between systems, unclear guidelines, a lack of corporate strategy, and the general ambiguity of any modern business environment.

Let’s look at how your human team members handle these issues.

Business rules aren’t really rules—they’re heuristics

Heuristics are the shorthands we humans formulate for situations we encounter regularly—for example, “at the fire pit, always sit upwind.”

These heuristics guide a lot of our business decisions.

In fact, there is even a theoretical framework built around these heuristics: Mischel and Shoda’s CAPS model (Cognitive-Affective Processing System).

Mischel and Shoda’s key insight is counterintuitive: people aren’t consistent in general—they have a tendency to behave consistently across similar contexts.

Take the fire pit example.

“Always sit upwind” sounds like the most common-sense thing to do, but somehow most fire pit seating arrangements turn out to be quite evenly distributed.

There are two reasons for this.

The first is that we humans are, if anything, adaptable.

Even if you are one of those “always sit upwind” people, it is quite likely that a change in context would lead you to change your behavior. For example:

You see someone really interesting you want to talk to sitting downwind.

It is freezing, so you decide to take the smoke to get closer to the heat.

You arrive late, and rather than ask everyone to shuffle around, you take the only open seat, which is downwind.

The second reason is that these behavioral patterns are different from person to person.

So while some people would—all things being equal—prefer to stay smoke-free, others will default to “always sit next to the most interesting person” as a maxim.

Mischel and Shoda called these stable if-then patterns “personality signatures.”

The formula is simple: if [situation], then [behavior].

They found that once you know someone’s signatures, their behavior becomes much more predictable.

In business, situational factors that can lead the same person to behave differently include:

What’s at stake if something goes wrong

Whether mistakes are reversible

How much tacit knowledge the task requires

Whether the output represents them personally

So what does this mean for onboarding your new AI coworker?

The good news is that AI agents are better suited to adapt than previous generations of information-processing systems because they are able to process much more of the business context. Unstructured data is no longer a black box for these systems.

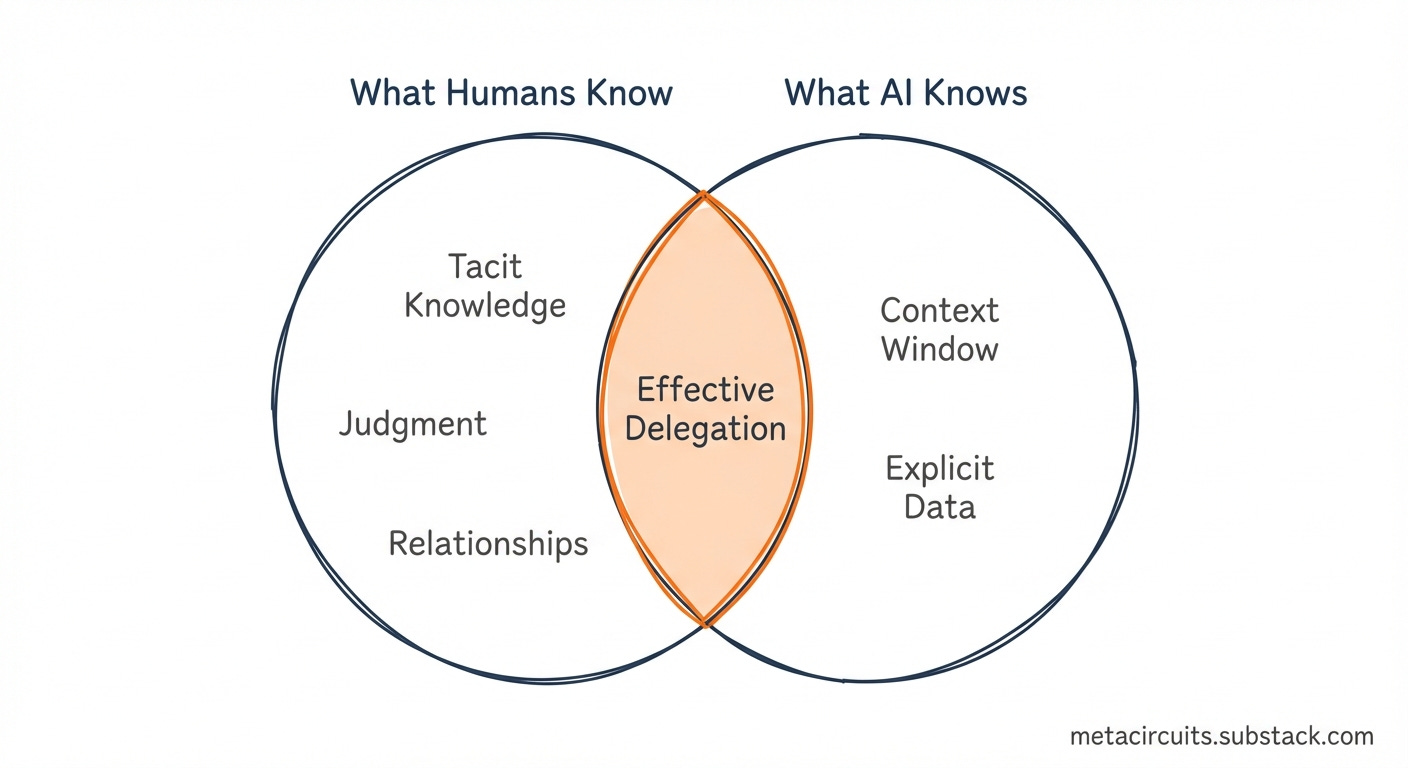

The bad news is that these AI systems don’t know nearly enough about your business context to be effective coworkers.

Which means you and your team will need to guide them.

Engineered systems miss tacit, implicit business knowledge

Often, what is captured in a business’s systems of record and automated processes amounts to just 10-15% of what is needed to make effective business decisions.

The push towards a data-driven enterprise notwithstanding, there is a reason why your headcount feels bloated right now—data coverage is patchy, data quality is questionable and the automated processes tying everything together still need to be built.

So you hire more people (accounts receivable specialists to check invoices that fall through the cracks, sales ops analysts to reconcile conflicting sales data, business analysts to figure out what the hell is going on in your business) to serve as the bridge between partial solutions and broken processes.

Trying to onboard an AI coworker in this environment is like bringing a smart fridge to a battlefield and hoping it somehow catches bullets.

In other words, you will need humans to steer these AI systems.

As I explained in a previous post, AI works great as the execution layer—with the right human supervision, it’s the 2026 equivalent of giving your team superpowers.

What it cannot do, though, is make sound business decisions on its own.

So your best bet is to leverage it where it excels: executing information-processing tasks at superhuman speed.

With the right human guidance and quality control, it can turn average performers into real weapons. Not to mention what it can do for your top performers.

Moving beyond skill-based assessments of AI tools

The next time you want to upgrade the information-processing capabilities in your business, rather than looking at individual features or capabilities, ask yourself these two questions:

Will it empower your current top performers?

Trust your top performers to have come up with the right heuristics to thrive in your business and the environment it operates in.

Rather than forcing them to change their ways of working (or, god forbid, using AI as a way to level up your underperformers), make sure the AI coworkers you bring in complement your top performers.

These AI systems should help them excel in the work they’re currently doing, either through increased leverage or by addressing key weaknesses in your team.

As discussed above, when you map out current workflows, don’t just look at vendor demos of the happy path. Full automation of knowledge work is fool’s gold at this point in time; new AI model architectures are needed to make that happen.

Instead, focus on flexibility.

Take into account how the AI system would handle different business situations.

Can your operations head get detailed reasoning when she’s reviewing something risky, and just get it done when she’s clearing routine items? Can your sales lead have full control for key accounts and let automation handle the rest?

Watch for these tells:

Can users see why the AI did what it did? (Or is it a black box?)

Can users dial autonomy up or down based on the task?

Does it ever ask clarifying questions? (Or does it just confidently guess?)

Can users intervene at any stage? (Or is it all-or-nothing?)

Tools that force a single workflow on users will alienate your best people first.

The ones with the most context, the most judgment, the most valuable perspectives.

And speaking from experience, one of the biggest drivers of successful AI adoption is whether users trust the system enough to want to work with it.

Trust in these systems takes a long time to build, and can be lost in seconds.

Does it actually learn your business, or just pretend to?

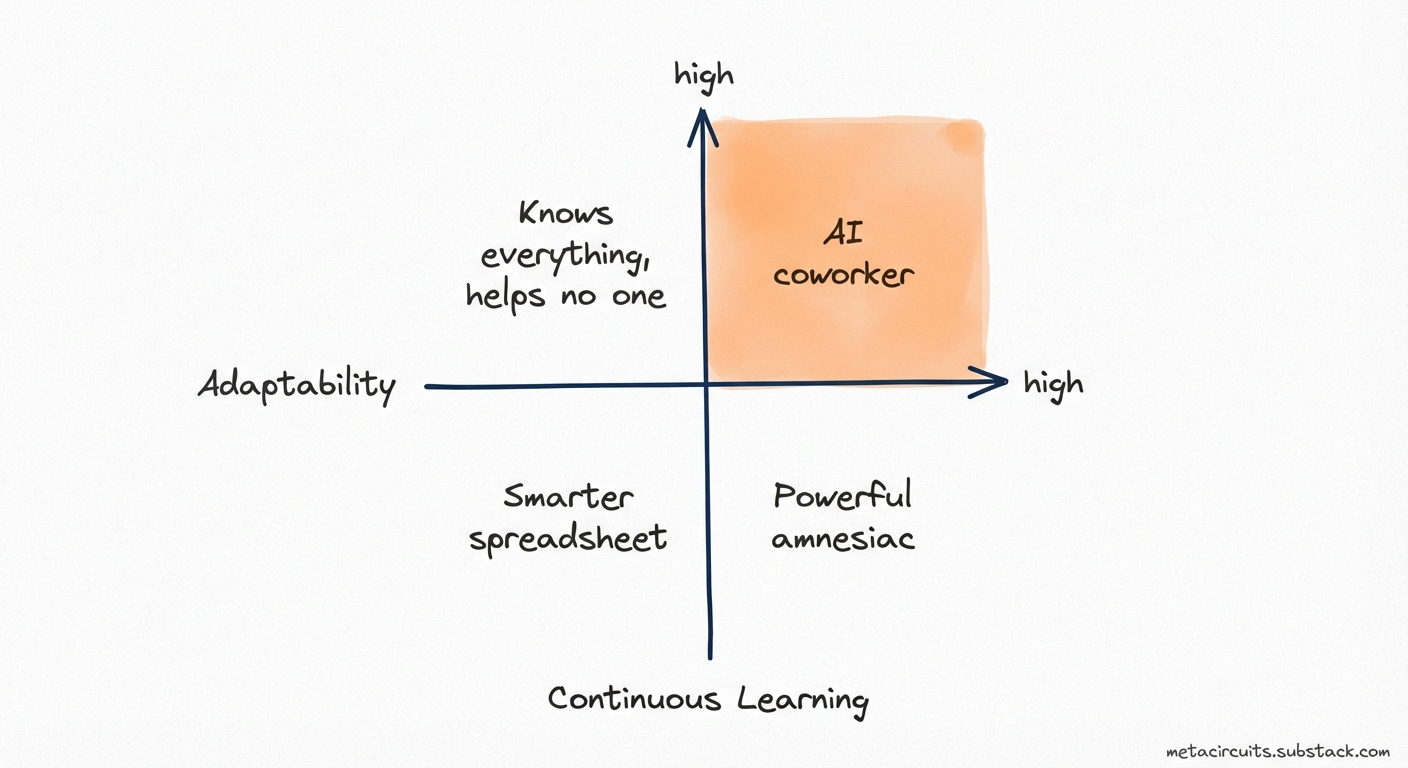

The other key thing to keep in mind is that most agentic AI tools out there today are basically amnesiac. They aren’t built to learn the heuristics that make people great.

This inability to build up business context over time (in technical terms, a lack of continuous learning) is what makes it absolutely necessary to have humans supervise even the most advanced AI coworkers in 2026.

Even as the delegation of tasks becomes the new way of working with AI systems, humans will need to continuously check outputs, set guardrails and give directions.

I do expect the next generation of AI coworkers will be better equipped to learn business rules and update their knowledge of the business.

They will need less and less explicit human input to get things done.

But right now in early 2026, your people will be the ones filling in that gap—re-explaining context in prompts, fixing outputs, and catching mistakes the AI would have avoided if it had a more complete understanding of your business.

Keep this in mind when you are evaluating your next AI purchase.

The less time your team spends writing out complex instructions, the more value the tool will bring. Even today, there are AI tools out there equipped to bridge this gap.

Look for:

How the tool connects to your actual systems and documents.

How it accumulates knowledge about your organization.

How it remembers what worked for your team.

… unless you want your team to spend the next 12 months crafting bigger and bigger prompts to make these systems work.

Same person, different context, different needs

To conclude: before buying into the latest shiny AI tool, figure out what type of delegation you and your team would be comfortable with, and which behavioral patterns they default to in key business situations.

Map the if-then patterns that drive business outcomes for your top performers:

If it’s client-facing, then I verify

If it’s routine, then I let it run

If there’s ambiguity, then I take over

If it’s time-sensitive, then I want speed over control

If the tool can accommodate these patterns, you’ve potentially found yourself a winner.

If it can’t, you’ll get adoption from some people and friction from others—not because they’re resistant to change, but because the tool doesn’t fit how they work.

Want to dive deeper in a hands-on session? Reach out by replying to this email!

Last week in AI

Surprise, surprise: OpenAI is bringing ads to ChatGPT. In a January 16 announcement, OpenAI revealed plans to begin testing advertisements in the coming weeks for US-based free and Go tier users. The “Sponsored Recommendations” will appear at the bottom of responses, clearly labeled and separated from organic answers. Paid subscriptions will remain ad-free.

Healthcare AI is heating up. Just days after OpenAI announced ChatGPT for Health at the J.P. Morgan Healthcare Conference, Anthropic fired back with Claude for Healthcare on January 11. US subscribers on Pro and Max plans can now connect their health records via HealthEx and Function, with Apple Health and Android Health Connect integrations rolling out.

NVIDIA and Eli Lilly are betting $1 billion on AI-powered drug discovery. The pharma giant and the chipmaker announced a first-of-its-kind co-innovation lab on January 12 at the J.P. Morgan Healthcare Conference, promising to co-locate Lilly scientists with NVIDIA AI engineers in San Francisco. Built on NVIDIA’s BioNeMo platform and upcoming Vera Rubin architecture, the lab aims to create a “continuous learning system” connecting wet labs and computational work around the clock.

Malaysia and Indonesia became the first countries to block Grok. On January 11-12, both nations temporarily restricted access to the AI chatbot after authorities determined it lacked safeguards against generating non-consensual sexual deepfakes. Indonesia’s communications minister called such practices “a serious violation of human rights, dignity, and citizens’ security in the digital space.”

One CES announcement that flew under the radar but could become a really big deal: on January 5, Boston Dynamics and Google DeepMind announced a partnership to bring Gemini to humanoid robots. The partnership will integrate DeepMind’s Gemini Robotics foundation models into Boston Dynamics’ commercial Atlas humanoid. The robots are destined for Hyundai auto factories, with all 2026 deployments already committed.
