4 Lessons in AI Product Design From Microsoft Copilot

Patterns and anti-patterns for AI integrations

One of the truisms I repeat the most to my clients is that AI products are only as valuable as the data integrations they enable for their users.

Microsoft seems hell-bent on proving me wrong.

Whenever I interact with one of the products in the Copilot family it feels as if they’ve succeeded in doing a disservice to both their customer base and the AI community.

This review on Reddit neatly sums up my experiences with Microsoft Copilot:

… I swear: I’m not just being negative. I want CoPilot to be good. I need it to be good.

But right now:

Yes, it's connected, but it’s not coherent.

It’s embedded into Office apps but doesn’t seem to know which version or language those apps are running in.

The UX is wildly inconsistent between apps.

It’s slow. Painfully slow to load or switch contexts.

You get generic error messages that tell you nothing.

Here’s one example:

I updated to a new version of Outlook and couldn’t find where to set my "Out of Office". So I opened CoPilot within Outlook and asked the question in Dutch. It replied with a step-by-step guide, in Dutch, great. But none of the buttons mentioned existed in my layout.

I mention this. CoPilot then correctly gives me steps for the new layout. I tell it my menu is in English. It then gives me steps in English… for the old layout. 🤦♂️

So I figured, maybe there are some lessons in AI product design to be learnt here?

Lesson #1: Meet users where they are

It’s the same mistake made in a lot of software products, with or without AI:

Treating every user the same—by failing to decide who your users are.

This creates software that’s either too simple for power users or too complex for novices. Microsoft’s product teams have historically struggled with making hard decisions, and this shines through once again in their AI integrations.

For AI products, a great way to think about user levels is through the lens of agency—with a concept known as the autonomy slider:

Do you want your AI to run on autopilot, or do you want fine-grained controls?

This will depend as much on personal preferences and the user's level of expertise with the software, the task and AI in general, as on what the AI is tasked to do.
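To make the slider idea a bit more concrete, here is a minimal sketch. Everything in it is my own illustration: the `Action` type, the 0 to 3 scale and the risk scores are assumptions, not how any particular product implements it. The idea is simply that the AI acts on its own only when an action's risk stays within what the user has delegated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    risk: int  # hypothetical score: 1 = trivial and easy to undo, 3 = costly or irreversible


def needs_human_approval(action: Action, autonomy: int) -> bool:
    """Return True when the action's risk exceeds what the user delegated.

    autonomy is the slider position: 0 = always ask, 3 = full autopilot.
    """
    if not 0 <= autonomy <= 3:
        raise ValueError("autonomy must be between 0 and 3")
    return action.risk > autonomy


# Illustrative actions only.
take_notes = Action("transcribe and summarise meeting", risk=1)
send_email = Action("send email on the user's behalf", risk=3)

print(needs_human_approval(take_notes, autonomy=1))  # low-risk task runs on its own
print(needs_human_approval(send_email, autonomy=1))  # high-risk task pauses for review
```

A novice might keep the slider low and approve everything, while a power user pushes it up for tasks they trust.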

Microsoft Copilot 365 nailed it in their Teams integration: meeting transcription, notes and summaries run on autopilot (you can only choose to turn them on or off), and the feature has received pretty much universally positive user reviews.

The Teams AI features seamlessly integrate with existing workflows and automate tasks that were perceived as a chore (taking meeting notes and making summaries).

Their Copilot 365 PowerPoint, Excel and Word integrations, on the other hand, are a hot flaming mess. Here’s another complaint from the same Reddit thread:

It's just awful for me so far … It can't even send me a summary of emails from the past 7 days - it consistently pulls results that are months old.

CoPilot in Excel can't even generate a simple spreadsheet - I've had far better luck with ChatGPT. CoPilot will TELL you how to do it, but doesn't actually do it.

CoPilot in Powerpoint is pretty decent at turning outlines into a basic presentation, but not much beyond that.

CoPilot in Word is just disappointing. Again, much better results generating base documents with ChatGPT and importing them and fixing the formatting.

—Source: Reddit, Copilot review from 3 months ago.

Pretty much all Copilot 365 reviews from users who have used non-Microsoft AI tools like ChatGPT, Claude, or Perplexity are overwhelmingly negative.

It goes to show that in order to meet users where they are, you need to look beyond simple app usage data. You need to understand user expectations, factor in different skill levels, map user workflows beyond the scope of the AI integration, and make sure that what the AI generates is actually perceived as helpful by the end users.

The Microsoft product teams that built these Copilot features skipped this stage and jumped straight into building features. If I had to guess, the teams Microsoft tasked with building Copilot were the same teams already working on these Microsoft 365 products, and this is the first time any of them built AI features.

This sorry state of affairs is still very much the reality more than a year after Mustafa Suleyman started his tenure as CEO of Microsoft AI. And even a brilliant thinker like Satya Nadella cannot sustainably keep driving up the share price on press releases alone; at some point their AI product teams need to start delivering.

Lesson #2: Context is key

Data context, user context and business context.

A lot of startups funded by Silicon Valley’s YC program these days are building AI products around the premise that context is key: so-called “vertical” AI startups that are looking to automate industry workflows with AI agents.

These AI startups are betting billions of dollars of VC money on the hypothesis that they can provide enough data and business context to their AI agents to let them outperform (or at-perform) humans operating in the same business environments.

One key issue for these vertical AI startups is that a lot of business processes and data contexts are different from company to company and industry to industry. Most of them have been struggling to gain any type of significant foothold so far in 2025 (“the year of the AI agent”), bar a few exceptions like Clay.com.

A good example of an application domain where setting the right context makes all the difference is AI coding. Both Microsoft’s GitHub Copilot and Cursor IDE started out as extensions of existing developer environments (existing user contexts) and gradually expanded the scope and reach of their AI integrations (the data context) as the AI systems they integrated with became more capable.

By working in a phased approach and a fixed, well-understood user context they were able to ensure backwards compatibility with user workflows as they gradually let their AI agents take over more and more of said workflow.

A downside of this approach is that when they do release changes that break user context, like the Cursor team did with a release in February of this year, they can get quite a bit of backlash from their user communities.

I glossed over it a bit, but a good AI integration of course starts with nailing the data context: making sure your AI systems have access to the right data at the right time when fulfilling a user request.
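Here is a rough sketch of what passing all three kinds of context along with a request could look like. The `RequestContext` fields and the prompt format are made up for illustration; a real integration would pull these values from the app and from a retrieval step rather than hard-coding them.

```python
from dataclasses import dataclass, field


@dataclass
class RequestContext:
    # User context: how this user's app is actually set up.
    ui_language: str
    app_layout: str
    # Data context: snippets retrieved for this specific request.
    documents: list[str] = field(default_factory=list)
    # Business context: rules that apply in this organisation.
    policies: list[str] = field(default_factory=list)


def build_prompt(question: str, ctx: RequestContext) -> str:
    """Assemble a prompt that states the context explicitly."""
    lines = [
        f"The user's menus are in: {ctx.ui_language}",
        f"The user's app layout is: {ctx.app_layout}",
    ]
    lines += [f"Relevant data: {doc}" for doc in ctx.documents]
    lines += [f"Company policy: {rule}" for rule in ctx.policies]
    lines.append(f"Question: {question}")
    return "\n".join(lines)


# Hypothetical values, loosely based on the Outlook example from the review.
ctx = RequestContext(
    ui_language="English",
    app_layout="new Outlook",
    policies=["Out-of-office replies must name a backup contact"],
)
print(build_prompt("Waar stel ik mijn Out of Office in?", ctx))
```

With the layout and menu language stated up front, the assistant no longer has to guess them from the language the question happens to be asked in.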

This is the core of what has made Cursor such a huge financial success. Michael Truell has discussed some of the work they do on this front in a podcast interview.

Lesson #3: Design for failure

Balancing the generative capabilities of the AI systems with the human capacity for review is key to building successful AI products. How and when you offer the output of your AI systems up for human review is your key design decision: the only one that really matters for an AI product.

At the same time, because generative AI systems are open-ended the number of failure modes that can arise during usage is theoretically infinite—which makes it really hard for AI product builders to predict, prevent and handle errors.

And as AI systems become more powerful, the impact of mistakes will also increase.

One great design innovation I’ve seen implemented across a number of AI products recently is the introduction of error-correction loops for AI agents. AI product builders have started giving their AI agents the diagnostic tools needed to make sure these agents are working on the right thing.

Which diagnostic tools are relevant is of course dependent on the type of task the AI is working on, but at the core of them all is feedback flowing back directly to the agentic AI systems. This feedback allows agentic systems to keep working on tasks longer without human input, thus enabling them to pick up more complex tasks.
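Stripped down to its bare bones, such a loop might look something like the sketch below. The function names are mine, and `fake_generate` and `fake_diagnose` are stand-ins for a real model call and a real diagnostic tool (a test run, a linter, a screenshot check). Treat it as an illustration of the pattern, not as any vendor's implementation.

```python
from typing import Callable, Optional


def run_with_feedback(
    generate: Callable[[str, Optional[str]], str],
    diagnose: Callable[[str], Optional[str]],
    task: str,
    max_attempts: int = 3,
) -> tuple[str, bool]:
    """Retry until diagnose reports no problem or attempts run out.

    Returns (last_output, succeeded). When succeeded is False, the
    caller should hand the output to a human instead of shipping it.
    """
    feedback: Optional[str] = None
    output = ""
    for _ in range(max_attempts):
        output = generate(task, feedback)
        feedback = diagnose(output)  # None means the check passed
        if feedback is None:
            return output, True
    return output, False


def fake_generate(task: str, feedback: Optional[str]) -> str:
    # Stand-in for a model call: it only gets it right after feedback.
    return "fixed code" if feedback else "broken code"


def fake_diagnose(output: str) -> Optional[str]:
    # Stand-in for a diagnostic tool such as a test run.
    return None if output == "fixed code" else "tests failed"


print(run_with_feedback(fake_generate, fake_diagnose, "add login page"))
```

The cap on attempts matters: it is what turns an endless retry loop into a deliberate hand-off to a human once the agent stops making progress.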

A natural consequence of—and key design goal for—these error-correction loops is that they reduce the number of times humans review the work of AI systems.

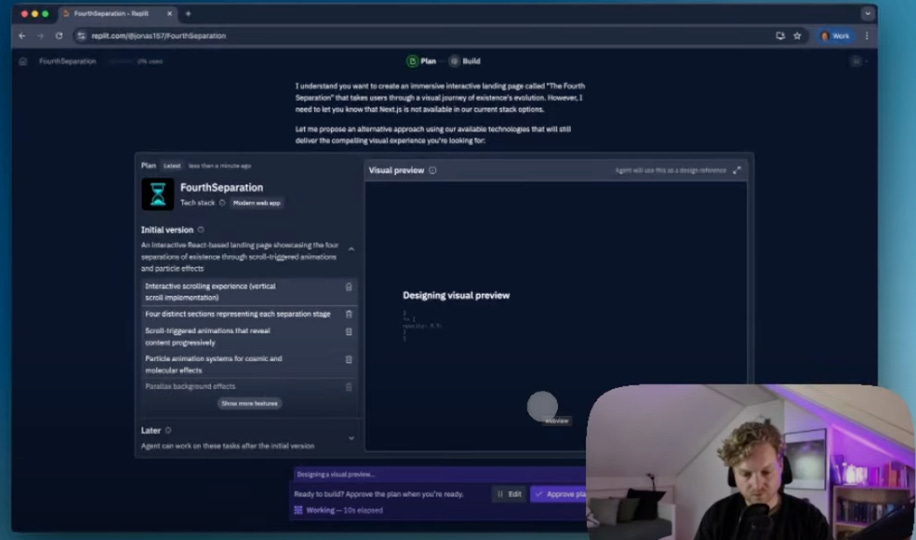

This means that as an AI product designer, you need to be even more intentional about when and how you ask for human input. I really like how this is done in Replit, where they quickly generate a visual preview of the app their agents will generate before their agents start working on the implementation in code.

This small user feedback loop allows users to provide feedback in seconds, rather than having to wait ten minutes or more for the agents to finish generating a working app.
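As a sketch, the pattern boils down to putting a cheap, fast step and a human checkpoint in front of the expensive one. The function and parameter names below are hypothetical and the stand-ins only record what was called; this is not how Replit implements it.

```python
from typing import Callable, Optional


def build_with_preview(
    request: str,
    make_preview: Callable[[str], str],
    ask_user: Callable[[str], bool],
    build: Callable[[str], str],
) -> Optional[str]:
    """Show a cheap preview first; only run the slow build if approved."""
    preview = make_preview(request)  # fast and cheap
    if not ask_user(preview):
        return None  # the expensive step never ran
    return build(request)  # slow and costly


def demo(approved: bool) -> tuple[Optional[str], list[str]]:
    """Run the flow with stand-in steps and record which ones executed."""
    calls: list[str] = []

    def make_preview(req: str) -> str:
        calls.append("preview")
        return f"mockup of {req}"

    def build(req: str) -> str:
        calls.append("build")
        return f"working app for {req}"

    result = build_with_preview("todo app", make_preview, lambda _: approved, build)
    return result, calls


print(demo(approved=False))
print(demo(approved=True))
```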

Another way of thinking about this is to deliberately and intentionally de-risk AI usage for your user with smart design choices—to minimise the amount of time and/or money your AI systems and agents spend generating the wrong things.

As for Microsoft, asking for user feedback was never their thing.

Copilot output does come with thumbs up and thumbs down, though…

Lesson #4: Avoid overreach

Even though AI systems are becoming ever more capable, you should design your AI products to work within the limitations of the AI systems available to you today.

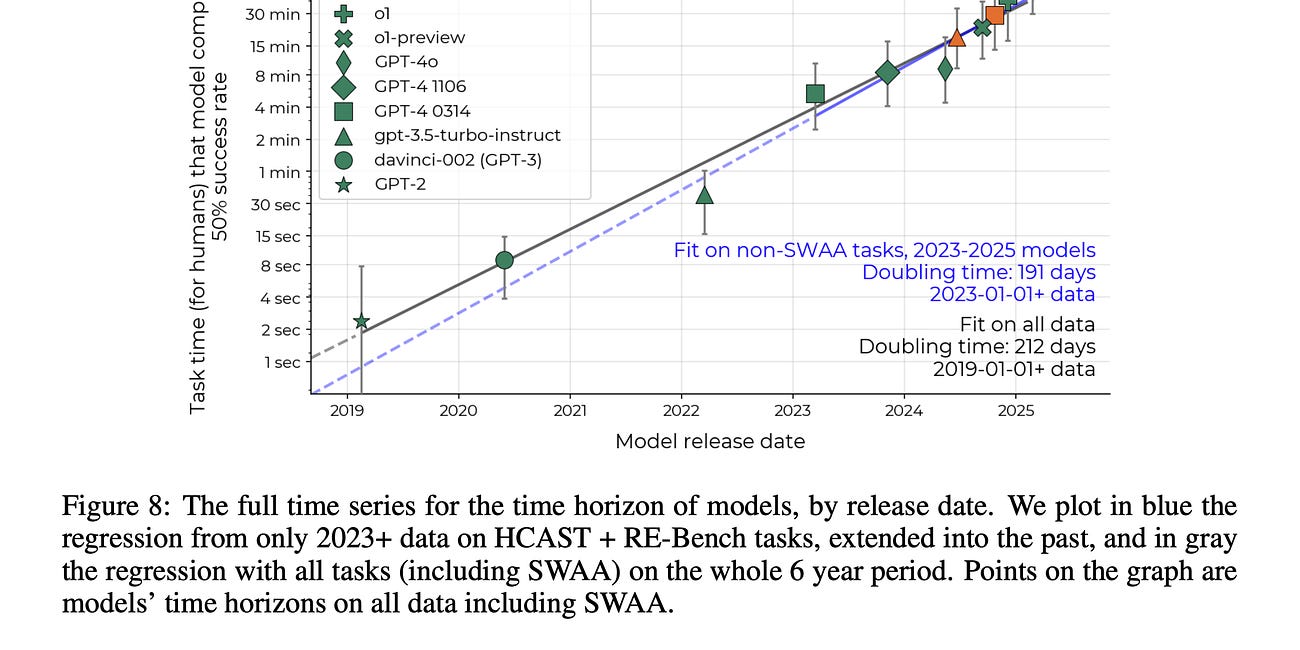

Plotting The AI Agent Growth Curve

It’s funny how I’m using deep research in Perplexity Pro on a daily basis, even though two months ago “deep research” didn’t exist in any consumer product.

This type of AI overreach usually shows itself in AI products where developers hope for the best and pray that “AI will do its magic”. Lack of design choices, guardrails and task-specific workflows are—in my opinion—signs of poor product leadership.

User value doesn’t come from AI products half-assing a million different things.

It comes from the AI product doing what the user expects it to do—from the AI product doing what it was designed to do. Anything else, and you’re bound to miss the mark.

This usually means starting from single, well-defined tasks and processes and automating those to and beyond the level at which humans do them, rather than building something vague, “general purpose” but ultimately useless. I argued something similar in my review of ChatGPT two weeks ago.
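One way to picture this in code is an assistant that only accepts tasks it was explicitly designed for and says so clearly when asked for anything else. The task names and handlers below are invented for illustration; real handlers would call a model with a task-specific workflow behind each entry.

```python
from typing import Callable

# Hypothetical task registry: each entry is a workflow someone designed and tested.
SUPPORTED_TASKS: dict[str, Callable[[str], str]] = {
    "summarise_meeting": lambda text: f"Summary of: {text[:40]}",
    "draft_reply": lambda text: f"Draft reply to: {text[:40]}",
}


def handle(task: str, payload: str) -> str:
    """Run a supported task, or decline clearly instead of improvising."""
    handler = SUPPORTED_TASKS.get(task)
    if handler is None:
        supported = ", ".join(sorted(SUPPORTED_TASKS))
        return f"Sorry, I can't do '{task}' yet. I can help with: {supported}."
    return handler(payload)


print(handle("summarise_meeting", "Q3 planning"))
print(handle("build_spreadsheet", "sales data"))
```

Declining is a design choice too: the user learns what the product is for, instead of getting a confident answer to a task nobody designed it to handle.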

If you don’t, your AI product might end up getting reviews like these:

The base model for MS Copilot Studio is a unbelievably stupid, lobotomized Chat-GPT.

Unfortunately for the poor souls stuck in companies that bought into the Microsoft Copilot AI productivity shtick, it means for now at least they are stuck with generic tooling that does nothing well because it was never designed to do anything useful other than “be AI”.

Let’s hope Mustafa gets his shit together at some point in the near future.

Last week in AI

Google is rolling out Gemini 2.5 "Deep Think" to Ultra users. “Deep Think” is their AI system designed for complex, multi-step reasoning tasks—the one that won a gold medal at the International Math Olympiad recently. It is best suited to help deliver comprehensive answers for challenges like business strategy or scientific analysis.

OpenAI launched "Study Mode" for ChatGPT, a new feature designed to act as a personalized learning assistant. Users can generate study plans, create quizzes from their documents, and get simplified explanations of complex topics.

Google's AI research assistant, NotebookLM, can now generate short video overviews from your documents, providing a quick, multimedia summary of key information. This "Video Overviews" feature is part of a broader "Studio" upgrade that enhances the tool's ability to synthesize your source materials into assets like briefing documents or FAQs.

Microsoft is rolling out "Copilot Mode" for its Edge browser, aiming to seamlessly integrate AI assistance directly into your web browsing workflow. This mode offers proactive summaries, suggestions, and context-aware help as you navigate the web, reducing the need to open a separate AI chat. It's designed to make online research and tasks faster and more intuitive.

Alibaba’s AI video research group released Wan 2.2, a high-quality open source video generation model. You can download the model weights from wan.video or HuggingFace. Running the model requires specialised hardware, so even though the model is free to use, generating video will probably still cost you money.

Anthropic upgraded Claude Code with the ability to use "sub-agents". Sub-agents allow a primary Claude model to delegate specific tasks to other specialized tools or AI models, acting as an orchestrator to solve complex multi-step problems.

Collaborative design platform Figma has successfully gone public, reaching a remarkable $19.3 billion valuation on its first day of trading. This major IPO underscores the immense value of cloud-based collaboration tools, which are increasingly enhanced with AI features to streamline creative and business workflows.

Here for this. Your point about bolt-ons vs. real, workflow-native AI hits home. I’m running an “A Feature a Day” to ship one improvement every day using AI, and need to put these lessons into action. I was trying to force Copilot for a while and fell back on Claude Code because Copilot's main value for me was auto-complete and code reviews, which I can get elsewhere for the same or better outcomes.